Significantly More AI Resources

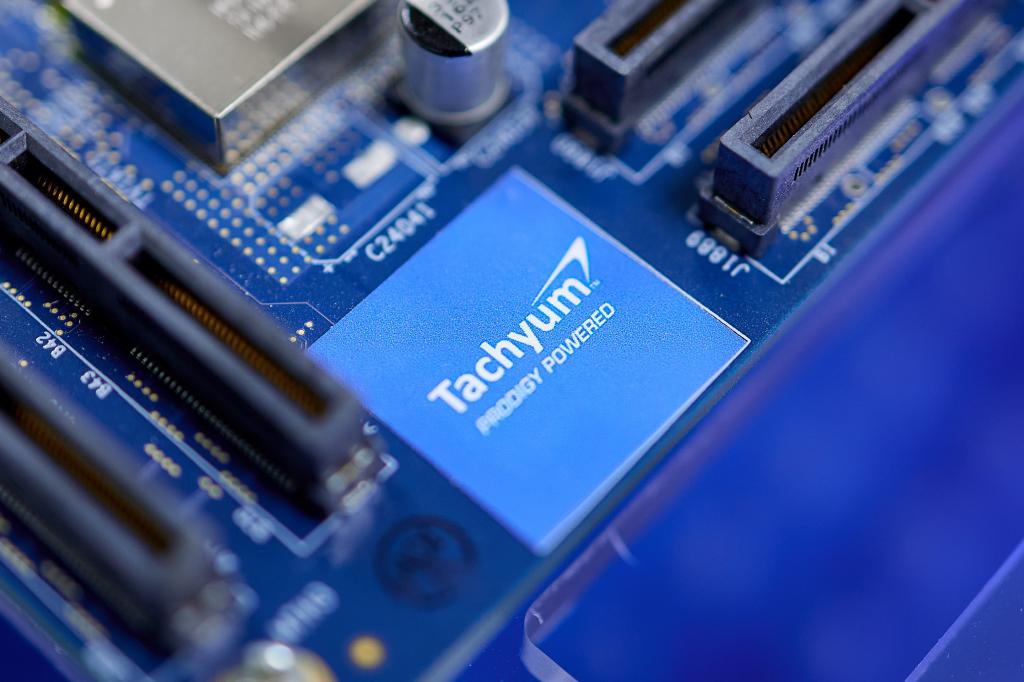

Tachyum’s Prodigy ATX Platform Democratizing AI for Everyone

Built from the ground up to meet the growing demand for AI across a wide range of applications and workloads, Prodigy’s AI subsystem incorporates innovative features that deliver the high performance and efficiency AI environments require. The white paper shows how a single Prodigy system with 1 terabyte (TB) of memory can run a GPT-4 model with 1.7 trillion parameters, whereas running the same model requires 52 NVIDIA H100 GPUs, at significantly higher cost and power consumption.

Because large language models (LLMs) demand so much memory capacity, determining an LLM’s memory footprint is critical. Equally critical is using the latest technology to minimize the memory footprint of state-of-the-art LLMs, which can have trillions of parameters. Prodigy’s advanced AI subsystem supports leading-edge data types such as 4-bit TAI and effective 2-bit weights with FP8 activations, which greatly reduce the memory footprint LLMs require.
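Because footprint estimation drives the comparison described here, a rough weights-only calculation helps show why lower-precision data types matter. This is a minimal sketch, not Tachyum’s method: it assumes the footprint is simply parameter count times bits per weight, ignoring activations, KV cache, and per-block scale factors, and it uses decimal gigabytes. The helper names `weight_footprint_gb` and `devices_needed` and the 80 GB per-device capacity are illustrative assumptions, and the 4-bit and 2-bit rows use generic bit widths rather than the TAI format itself.

```python
import math

# Hypothetical helpers for a weights-only estimate. They ignore activations,
# KV cache, and any per-block scale factors a real quantized format stores.

BYTES_PER_GB = 10**9  # decimal gigabytes


def weight_footprint_gb(num_params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone, in decimal GB."""
    return num_params * bits_per_weight / 8 / BYTES_PER_GB


def devices_needed(footprint_gb: float, gb_per_device: float) -> int:
    """Minimum number of devices whose combined memory holds the footprint."""
    return math.ceil(footprint_gb / gb_per_device)


PARAMS = 1.7e12          # parameter count quoted in the text
SYSTEM_MEMORY_GB = 1000  # 1 TB, decimal
GPU_MEMORY_GB = 80       # assumed per-device capacity, for illustration only

for label, bits in [("FP16", 16), ("FP8", 8), ("4-bit", 4), ("2-bit", 2)]:
    gb = weight_footprint_gb(PARAMS, bits)
    fits = "fits" if gb <= SYSTEM_MEMORY_GB else "does not fit"
    print(f"{label:>5}: {gb:7.1f} GB of weights, {fits} in 1 TB, "
          f"{devices_needed(gb, GPU_MEMORY_GB)} x {GPU_MEMORY_GB} GB devices")
```

Under these assumptions, FP16 weights alone come to 3,400 GB (43 devices at 80 GB each), while 4-bit and 2-bit weights come to 850 GB and 425 GB, both within 1 TB. Real deployments need memory beyond the weights, so these figures are lower bounds and are not meant to reproduce the white paper’s numbers.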

Find out more in our ATX Platform White Paper.

Improving AI Performance for a Wide Range of Disciplines

TPU® Licensable Core Bringing High-Performance AI to Any Device

Tachyum’s Prodigy-based TPU core will be sold as a licensable soft IP core, enabling edge computing and IoT products to directly incorporate high-performance AI capabilities at very low cost.

IoT products will have onboard high-performance AI inference engines, optimized to exploit Prodigy-based AI training in either the cloud or the home office.